In what was the most anticipated quarter this earnings season, Nvidia far outpaced lofty expectations on the top and bottom lines. Even better was a big revenue guide and a broader vision from CEO Jensen Huang that reinforced the notion that companies and countries are partnering with the AI chip powerhouse to shift $1 trillion worth of traditional data centers to accelerated computing.

Revenue for its fiscal 2025 first quarter surged 262% year over year to $26.04 billion, well ahead of analysts’ forecasts of $24.65 billion, according to data provider LSEG, formerly known as Refinitiv. The company had previously guided revenue to $24 billion, plus or minus 2%, so that was a huge beat. Adjusted earnings per share increased 461% to $6.12, exceeding the LSEG-compiled consensus estimate of $5.59. Adjusted gross margin of 78.9% also beat the Street’s 77.2% estimate, according to market data platform FactSet. The company had guided gross margins to 77%, plus or minus 50 basis points.

On top of the strong results, Nvidia announced a 10-for-1 stock split. Although stock splits don’t technically create value, they do tend to have a positive impact on the stock. The company said the split is to “make stock ownership more accessible to employees and investors.” We commend Nvidia for doing this and will continue to press other companies to do the same. Nvidia most recently split its stock in July 2021 on a 4-for-1 basis. In after-hours trading, it was little surprise to see Nvidia shares surging.

Nvidia

Why we own it: Nvidia’s high-performance graphics processing units (GPUs) are the key driver behind the AI revolution, powering the accelerated data centers being rapidly built around the world. But this is more than just a hardware story. Through its Nvidia AI Enterprise service, Nvidia is in the process of building out a potentially massive software business.
Competitors: Advanced Micro Devices and Intel

Most recent buy: Aug. 31, 2022

Initiation: March 2019

Bottom line

What air pocket? Coming into the quarter, it sounded like the only thing that could hold Nvidia back was a product transition-related slowdown from customers delaying orders of the H100 and H200 GPUs (graphics processing units) in anticipation of the superior Blackwell chip platform. As you can see from Nvidia’s big beat and upside guide, that was far from the case, and demand is expected to exceed supply for quite some time.

Should this narrative form again, here’s a good thing to remember for next time so that these concerns don’t shake you out of a strong long-term thesis: Jensen explained on the post-earnings conference call that customers are still so early in their build-outs that they have to keep buying chips to keep up in the current technology arms race. And technology leadership is everything. “There’s going to be a whole bunch of chips coming at them and they just got to keep on building and just, if you will, performance average your way into it. So that’s the smart thing to do,” the CEO said.

More broadly, we didn’t hear anything Wednesday evening to change our long-term view about how Nvidia is the driving force behind the current AI industrial revolution. Here’s how Jensen explained the shift that’s happening: “Longer term, we’re completely redesigning how computers work. And this is a platform shift. Of course, it’s been compared to other platform shifts in the past, but time will clearly tell that this is much, much more profound than previous platform shifts. And the reason for that is because the computer is no longer an instruction-driven only computer. It’s an intention understanding computer.”

Jensen went on to mention how the computer not only interacts with us, “but it also understands our meaning, what we intend that we asked it to do, and it has the ability to reason, inference iteratively to process and plan and come back with a solution.”

The billions and billions of dollars being spent on accelerated computing are why we own Nvidia for the long haul and are not trying to trade it back and forth on every headline.

By the way, another bearish narrative we often hear is that the custom chips all the big cloud companies are making are a threat to Nvidia’s leadership. Jensen doesn’t see it that way because his platform system has the highest performance at the lowest total cost of ownership. It’s an unbeatable value proposition.

[Chart: Nvidia (NVDA) year-to-date stock performance]

The strong results and outlook, upbeat commentary, and stock split were sending Nvidia shares roughly 6% higher to above $1,000 per share for the first time ever. However, we don’t think the gains end here. We’re increasing our price target to $1,200 from $1,050 and maintaining our 2 rating, meaning we view it as a buy on pullbacks.

Quarterly results

Growth was driven by all customer types, but enterprise and consumer internet companies led the way. Large cloud companies represented a mid-40% share of data center revenue in the quarter, so when you see companies like Oracle and Club names Amazon, Microsoft and Alphabet raise their capital expenditure outlooks, understand that a lot of those dollars will flow Nvidia’s way. And there’s a good reason for it. On the call, Nvidia CFO Colette Kress estimated that for every $1 spent on Nvidia AI infrastructure, a cloud provider has an opportunity to earn $5 in GPU instance hosting revenue over four years.

One customer callout in the quarter was Tesla, which expanded its AI training cluster to 35,000 H100 GPUs.
Nvidia said Tesla’s use of Nvidia AI infrastructure “paved the way” for the “breakthrough performance” of full self-driving version 12. (Full self-driving, or FSD, is the way Tesla markets its high-level driver-assistance software.) Interestingly, Nvidia sees automotive as a huge vertical this year, a multibillion-dollar revenue opportunity across on-premises and cloud consumption.

Another highlight was Meta’s announcement of Llama 3, its large language model. It was trained on a cluster of 24,000 H100 GPUs. Kress believes that as more consumer internet customers use generative AI applications, Nvidia will see more growth opportunities.

The Tesla and Meta clusters are examples of what Nvidia calls “AI Factories.” The company believes “these next-generation data centers host advanced full-stack accelerated computing platforms where the data comes in and intelligence comes out.”

Nvidia also pointed out that sovereign AI has been a big source of growth. The company defines sovereign AI as a “nation’s capabilities to produce artificial intelligence using its own infrastructure, data, workforce, and business networks.” Kress expects sovereign AI revenue to approach the high single-digit billions of dollars this year from nothing last year.

Looking ahead, Nvidia sees supply for the H100 improving but is still constrained on the H200. Even with the transition to Blackwell, Nvidia expects demand for Hopper to continue for quite some time. “Everybody is anxious to get their infrastructure online, and the reason for that is because they’re saving money and making money, and they would like to do that as soon as possible,” the company said. In other words, customers will take whatever they can get. But look for Blackwell revenue later this year, perhaps in a very meaningful amount.
The company explained that Blackwell is in production, with shipments expected to start in the fiscal 2025 second quarter and ramp in the third, and customers expected to have full data centers stood up in the fourth quarter.

Software was mentioned more than two dozen times on the conference call. Nvidia said on the prior quarter’s call that its software and services, taken together, reached an annualized revenue rate of $1 billion. These are high-margin, recurring-revenue businesses, and they continue to be key areas to watch in future quarters.

As for China, the company said it started to ramp up new products specifically made for the region that don’t require an export control license. The U.S. government has put restrictions on sales of the fastest chips for fear they will be used by the Chinese military. However, it doesn’t look like China will be the driver of revenue it was in the past, because the limitations on Nvidia’s technology have made the environment more competitive.

Guidance

The company’s fiscal second-quarter guide should dispel the market’s concerns that some sort of AI spending “air pocket” was forming. For the current quarter, Nvidia projected revenue of $28 billion, plus or minus 2%, above the consensus estimate of $26.6 billion. Adjusted gross margins are expected to be 75.5%, plus or minus 50 basis points, above estimates of 75.2%.

Capital returns

Nvidia increased its quarterly dividend by 150%, which is nice, but the annual yield is insignificant to the investment case. More impactful is the $7.7 billion of stock the company repurchased in fiscal Q1.

(Jim Cramer’s Charitable Trust is long NVDA. See here for a full list of the stocks.)

As a subscriber to the CNBC Investing Club with Jim Cramer, you will receive a trade alert before Jim makes a trade. Jim waits 45 minutes after sending a trade alert before buying or selling a stock in his charitable trust’s portfolio.
If Jim has talked about a stock on CNBC TV, he waits 72 hours after issuing the trade alert before executing the trade.

THE ABOVE INVESTING CLUB INFORMATION IS SUBJECT TO OUR TERMS AND CONDITIONS AND PRIVACY POLICY, TOGETHER WITH OUR DISCLAIMER. NO FIDUCIARY OBLIGATION OR DUTY EXISTS, OR IS CREATED, BY VIRTUE OF YOUR RECEIPT OF ANY INFORMATION PROVIDED IN CONNECTION WITH THE INVESTING CLUB. NO SPECIFIC OUTCOME OR PROFIT IS GUARANTEED.

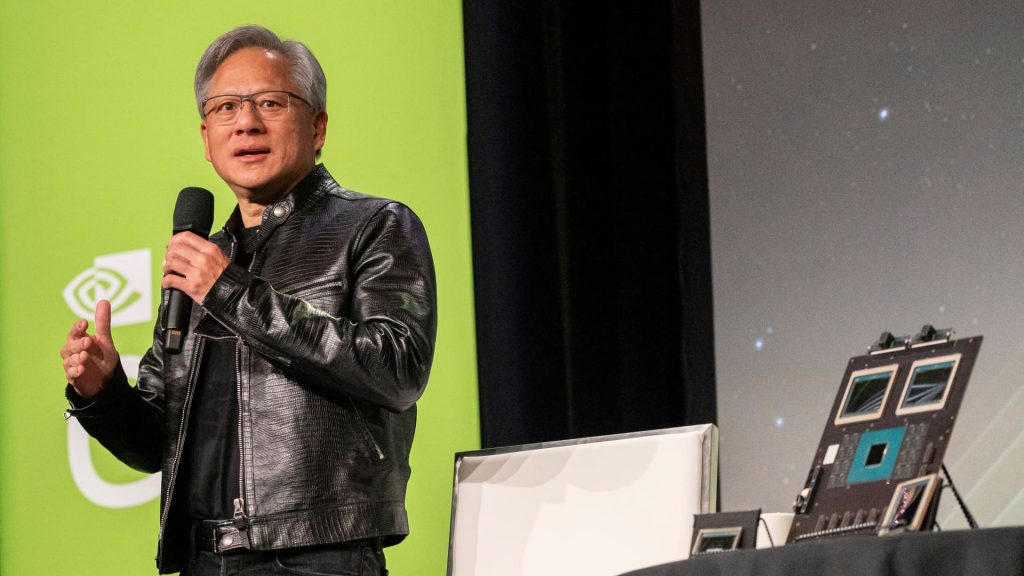
